WHAT IS ARTIFICIAL INTELLIGENCE (AI)?

Artificial intelligence (AI) is the simulation of human intelligence processes by machines, especially computer systems. Specific applications of AI include expert systems, natural language processing, speech recognition and machine vision.

How does AI work?

As the hype around artificial intelligence (AI) has accelerated, vendors have been scrambling to promote how their products and services use AI. Often what they refer to as AI is simply one component of AI, such as machine learning. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No one programming language is synonymous with AI, but a few, including Python, R and Java, are popular.

In general, AI systems work by ingesting large amounts of labelled training data, analyzing the data for correlations and patterns, and using these patterns to make predictions about future states. In this way, a chatbot that is fed examples of text chats can learn to produce lifelike exchanges with people, or an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples.
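To make the "ingest labelled data, find patterns, predict" loop concrete, here is a minimal sketch in Python. It uses a nearest-centroid classifier, chosen only because it is short, and the animal measurements are invented for illustration. Real systems use far larger datasets and more capable models.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Average the feature vectors of each label into one centroid per label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labelled training data: (height_cm, weight_kg) -> animal
training_data = [
    ((25.0, 4.0), "cat"),
    ((23.0, 5.0), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
]

centroids = train(training_data)
print(predict(centroids, (24.0, 4.5)))   # cat
print(predict(centroids, (58.0, 28.0)))  # dog
```

The "pattern" learned here is just the average of each group, and the prediction is whichever average a new example sits closest to.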

Artificial Intelligence (AI) programming

AI programming focuses on three cognitive skills: learning, reasoning and self-correction.

Learning processes. This aspect of AI programming focuses on acquiring data and creating rules for how to turn the data into actionable information. The rules, called algorithms, provide computing devices with step-by-step instructions for how to complete a specific task.

Reasoning processes. This aspect of AI programming focuses on choosing the right algorithm to reach a desired outcome.

Self-correction processes. This aspect of AI programming is designed to continually fine-tune algorithms and ensure they provide the most accurate results possible.
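As a rough illustration of these skills, the sketch below fits a straight line `y = w * x` to a handful of points using gradient descent. The data, the learning rate and the function name `fit_slope` are all assumptions made up for this example. Learning is the rule (the slope) derived from data, self-correction is the repeated small adjustment that shrinks the error, and the last line applies the learned rule to an unseen input.

```python
def fit_slope(points, learning_rate=0.01, steps=500):
    """Fit y = w * x by repeatedly nudging w to reduce the squared error."""
    w = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in points) / len(points)
        # Self-correction: move w a small step against the gradient.
        w -= learning_rate * gradient
    return w


# Hypothetical observations that roughly follow y = 3x.
points = [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2), (4.0, 11.8)]

w = fit_slope(points)
print(round(w, 2))  # learned slope, close to 3
print(w * 5.0)      # prediction for an unseen input x = 5
```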

Why is artificial intelligence important?

AI is important because it can give enterprises insights into their operations that they may not have been aware of previously and because, in some cases, AI can perform tasks better than humans. Particularly when it comes to repetitive, detail-oriented tasks like analyzing large numbers of legal documents to ensure relevant fields are filled in properly, AI tools often complete jobs quickly and with relatively few errors.

This has helped fuel an explosion in efficiency and opened the door to entirely new business opportunities for some larger enterprises. Prior to the current wave of artificial intelligence (AI), it would have been hard to imagine using computer software to connect riders to taxis, but today Uber has become one of the largest companies in the world by doing just that. It utilizes sophisticated machine learning algorithms to predict when people are likely to need rides in certain areas, which helps proactively get drivers on the road before they are needed. As another example, Google has become one of the largest players for a range of online services by using machine learning to understand how people use its services and then improving them. In 2017, the company’s CEO, Sundar Pichai, pronounced that Google would operate as an “AI first” company.

Today’s largest and most successful enterprises have used artificial intelligence (AI) to improve their operations and gain an advantage over their competitors.

What are the advantages and disadvantages of artificial intelligence (AI)?

Artificial neural networks and deep learning artificial intelligence technologies are quickly evolving, primarily because AI processes large amounts of data much faster and makes predictions more accurately than humanly possible.

While the huge volume of data being created on a daily basis would bury a human researcher, AI applications that use machine learning can take that data and quickly turn it into actionable information. As of this writing, the primary disadvantage of using AI is that it is expensive to process the large amounts of data that artificial intelligence (AI) programming requires.

Advantages

- Good at detail-oriented jobs;

- Reduced time for data-heavy tasks;

- Delivers consistent results; and

- AI-powered virtual agents are always available.

Disadvantages

- Expensive;

- Requires deep technical expertise;

- Limited supply of qualified workers to build AI tools;

- Only knows what it has been shown;

- Lack of ability to generalize from one task to another.

Strong AI vs. weak AI

Artificial intelligence (AI) can be categorized as either weak or strong.

- Weak artificial intelligence (AI), also known as narrow AI, is an AI system that is designed and trained to complete a specific task. Industrial robots and virtual personal assistants, such as Apple’s Siri, use weak AI.

- Strong AI, also known as artificial general intelligence (AGI), describes programming that can replicate the cognitive abilities of the human brain. When presented with an unfamiliar task, a strong AI system can use fuzzy logic to apply knowledge from one domain to another and find a solution autonomously. In theory, a strong AI program should be able to pass both a Turing Test and the Chinese room test.

What are the 4 types of artificial intelligence (AI)?

Arend Hintze, an assistant professor of integrative biology and computer science and engineering at Michigan State University, explained in a 2016 article that AI can be categorized into four types, beginning with the task-specific intelligent systems in wide use today and progressing to sentient systems:

- Type 1: Reactive machines. These AI systems have no memory and are task specific. An example is Deep Blue, the IBM chess program that beat Garry Kasparov in the 1990s. Deep Blue can identify pieces on the chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future ones.

- Type 2: Limited memory. These AI systems have memory, so they can use past experiences to inform future decisions. Some of the decision-making functions in self-driving cars are designed this way.

- Type 3: Theory of mind. Theory of mind is a psychology term. When applied to AI, it means that the system would have the social intelligence to understand emotions. This type of AI will be able to infer human intentions and predict behavior.

- Type 4: Self-awareness. In this category, AI systems have a sense of self, which gives them consciousness. Machines with self-awareness understand their own current state. This type of AI does not yet exist.
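The difference between Type 1 and Type 2 above can be shown with a toy example. The sketch below is purely illustrative: the braking scenario, the thresholds and the names `reactive_brake` and `LimitedMemoryBrake` are invented here and do not describe how Deep Blue or any real self-driving car works. The reactive function sees only the current reading, while the limited-memory agent also keeps a short history and reacts to the trend.

```python
def reactive_brake(distance_m):
    """Type 1 style: decide from the current reading only."""
    return distance_m < 10.0


class LimitedMemoryBrake:
    """Type 2 style: keep a few recent readings and act on the trend."""

    def __init__(self, window=3):
        self.window = window
        self.history = []

    def decide(self, distance_m):
        # Remember only the most recent `window` readings.
        self.history = (self.history + [distance_m])[-self.window:]
        # Brake if the obstacle is close, or if it is approaching fast.
        closing_fast = (len(self.history) == self.window
                        and self.history[0] - self.history[-1] > 15.0)
        return distance_m < 10.0 or closing_fast


readings = [60.0, 45.0, 30.0]  # hypothetical distances to an obstacle, in metres
agent = LimitedMemoryBrake()
print([reactive_brake(d) for d in readings])  # [False, False, False]
print([agent.decide(d) for d in readings])    # [False, False, True]
```

Given the same three readings, only the agent with memory notices that the obstacle is closing in quickly.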

How Is AI Used? Artificial Intelligence (AI) Examples

While addressing a crowd at the Japan AI Experience in 2017, DataRobot CEO Jeremy Achin began his speech by offering the following definition of how AI is used today:

“AI is a computer system able to perform tasks that ordinarily require human intelligence. Many of these artificial intelligence systems are powered by machine learning, some of them are powered by deep learning and some of them are powered by very boring things like rules.”

Other AI Classifications

There are three ways to classify artificial intelligence based on its capabilities. Rather than types of artificial intelligence, these are stages through which AI can evolve, and only one of them is actually possible right now.

- Narrow AI: Sometimes referred to as “weak AI,” this kind of AI operates within a limited context and is a simulation of human intelligence. Narrow AI is often focused on performing a single task extremely well, and while these machines may seem intelligent, they operate only within that limited context.

- Artificial general intelligence (AGI): AGI, sometimes referred to as “strong AI,” is the kind of AI we see in movies, like the robots from Westworld or the character Data from Star Trek: The Next Generation. AGI is a machine with general intelligence, much like a human being.

- Superintelligence: This will likely be the pinnacle of AI’s evolution. Superintelligent AI will not only be able to replicate the complex emotion and intelligence of human beings, but surpass it in every way. This could mean making judgments and decisions on its own, or even forming its own ideology.

The Future of Artificial Intelligence (AI)

When one considers the computational costs and the technical data infrastructure running behind artificial intelligence, actually executing on AI is a complex and costly business. Fortunately, there have been massive advancements in computing technology, as indicated by Moore’s Law, which states that the number of transistors on a microchip doubles about every two years while the cost of computers is halved.

Although many experts believe that Moore’s Law will likely come to an end sometime in the 2020s, this has had a major impact on modern AI techniques — without it, deep learning would be out of the question, financially speaking. Recent research found that AI innovation has actually outperformed Moore’s Law, doubling every six months or so as opposed to two years.
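To see how different those two doubling rates are, here is a quick back-of-the-envelope calculation. It takes the figures above at face value and assumes steady doubling over a ten-year span, which is a simplification. The function name `growth_factor` is just a label for this example.

```python
def growth_factor(years, doubling_period_years):
    """How many times larger a quantity is after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)


decade = 10
print(growth_factor(decade, 2.0))  # doubling every two years -> 32.0
print(growth_factor(decade, 0.5))  # doubling every six months -> 1048576.0
```

Over one decade, doubling every two years gives a 32-fold increase, while doubling every six months gives roughly a million-fold increase.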

By that logic, the advancements artificial intelligence has made across a variety of industries have been major over the last several years. And the potential for an even greater impact over the next several decades seems all but inevitable.

Applications of Artificial Intelligence

Machines and computers affect how we live and work. Top companies are continually rolling out revolutionary changes to how we interact with machine-learning technology.

DeepMind Technologies, a British artificial intelligence company, was acquired by Google in 2014. The company created a Neural Turing Machine, allowing computers to mimic the short-term memory of the human brain.

Google’s driverless cars and Tesla’s Autopilot features have introduced AI into the automotive sector. Elon Musk, CEO of Tesla Motors, has suggested on Twitter that Teslas will be able to predict the destinations their owners want to go to by using AI to learn their patterns of behaviour.

Furthermore, Watson, a question-answering computer system developed by IBM, is designed for use in the medical field. Watson suggests various kinds of treatment for patients based on their medical history and has proven to be very useful.

Some of the more common commercial business uses of AI are:

1. Banking Fraud Detection

From extensive data consisting of fraudulent and non-fraudulent transactions, the AI learns to predict if a new transaction is fraudulent or not.

2. Online Customer Support

AI is now automating most of the online customer support and voice messaging systems.

3. Cyber Security

Using machine learning algorithms and sample data, AI can be used to detect anomalies and adapt and respond to threats.

4. Virtual Assistants

Siri, Cortana, Alexa, and Google Now use voice recognition to follow the user’s commands. They collect information, interpret what is being asked, and supply an answer from fetched data. These virtual assistants gradually improve and personalize solutions based on user preferences.
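As a small illustration of the anomaly detection mentioned under Cyber Security above, the sketch below flags values that sit far from a baseline built from sample data. It uses a plain z-score rule rather than a trained machine learning model, which is a deliberate simplification. The login counts and the name `find_anomalies` are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(baseline, new_values, threshold=3.0):
    """Flag new values more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [v for v in new_values if abs(v - mu) > threshold * sigma]


# Hypothetical sample data: failed logins per hour during normal operation.
baseline = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
# New observations to check against the baseline.
observed = [5, 7, 48, 6]

print(find_anomalies(baseline, observed))  # [48]
```

A production system would typically learn a richer model of normal behaviour and update it over time, but the idea of comparing new activity against what was seen in sample data is the same.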

Goals of AI

- To Create Expert Systems − Systems that exhibit intelligent behavior, learn, demonstrate, explain, and advise their users.

- To Implement Human Intelligence in Machines − Creating systems that understand, think, learn, and behave like humans.

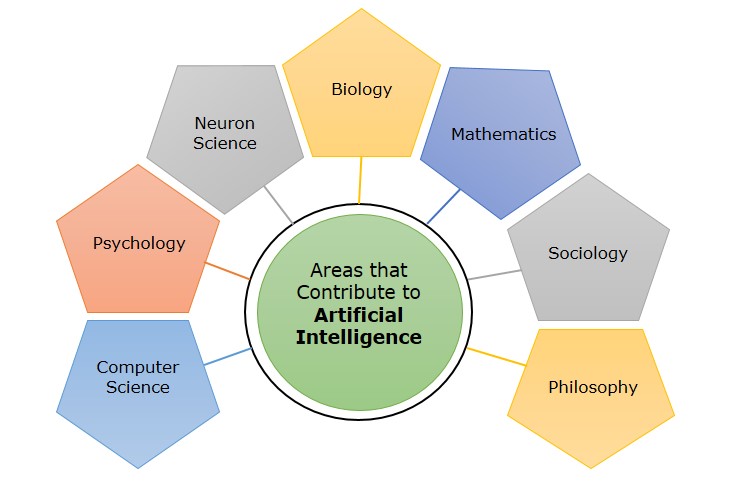

What Contributes to AI?

Artificial intelligence is a science and technology based on disciplines such as Computer Science, Biology, Psychology, Linguistics, Mathematics, and Engineering. A major thrust of AI is in the development of computer functions associated with human intelligence, such as reasoning, learning, and problem solving.

One or more of these areas can contribute to building an intelligent system.

Programming Without and With AI

Programming without and with AI differs in the following ways −

| Programming Without AI | Programming With AI |
| --- | --- |
| A computer program without AI can answer the specific questions it is meant to solve. | A computer program with AI can answer the generic questions it is meant to solve. |
| Modification in the program leads to change in its structure. | AI programs can absorb new modifications by putting highly independent pieces of information together. Hence you can modify even a minute piece of information of program without affecting its structure. |
| Modification is not quick and easy. It may lead to affecting the program adversely. | Quick and Easy program modification. |
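One way to picture "highly independent pieces of information" is a small rule engine, where knowledge lives in data and the program that uses it stays the same. The sketch below uses forward chaining, a classic expert-system technique picked here as an illustration. The rules and fact names are made up, and this is not a claim about how any particular AI product is built.

```python
def infer(facts, rules):
    """Forward chaining: keep applying rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conclusion not in known and conditions <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: each rule is (set of required facts, derived fact).
rules = [
    ({"has_fever", "has_cough"}, "possible_flu"),
    ({"possible_flu"}, "recommend_rest"),
]

print(sorted(infer({"has_fever", "has_cough"}, rules)))

# New knowledge is added as data; the infer() function itself is unchanged.
rules.append(({"possible_flu", "short_of_breath"}, "see_doctor"))
print("see_doctor" in infer({"has_fever", "has_cough", "short_of_breath"}, rules))
```

Adding the third rule changes what the program can conclude without touching its structure, which is the contrast the comparison above is drawing.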

What is AI Technique?

In the real world, knowledge has some unwelcome properties −

- Its volume is huge, next to unimaginable.

- It is not well-organized or well-formatted.

- It keeps changing constantly.

An AI technique is a way to organize and use knowledge efficiently such that −

- It should be perceivable by the people who provide it.

- It should be easily modifiable to correct errors.

- It should be useful in many situations even though it is incomplete or inaccurate.

AI techniques elevate the speed of execution of the complex programs they are equipped with.

Applications of AI

AI has been dominant in various fields such as −

- Gaming − AI plays a crucial role in strategic games such as chess, poker, tic-tac-toe, etc., where a machine can think of a large number of possible positions based on heuristic knowledge.

- Natural Language Processing − It is possible to interact with the computer that understands natural language spoken by humans.

- Expert Systems − There are some applications which integrate machine, software, and special information to impart reasoning and advising. They provide explanation and advice to the users.

- Vision Systems − These systems understand, interpret, and comprehend visual input on the computer. For example,

  - A spy plane takes photographs, which are used to figure out spatial information or a map of the area.

  - Doctors use a clinical expert system to diagnose patients.

  - Police use computer software that can recognize the face of a criminal from a stored portrait made by a forensic artist.

- Speech Recognition − Some intelligent systems are capable of hearing and comprehending language in terms of sentences and their meanings while a human talks to them. They can handle different accents, slang words, background noise, changes in a person’s voice due to a cold, etc.

- Handwriting Recognition − Handwriting recognition software reads text written on paper with a pen or on a screen with a stylus. It can recognize the shapes of the letters and convert them into editable text.

- Intelligent Robots − Robots are able to perform the tasks given by a human. They have sensors to detect physical data from the real world such as light, heat, temperature, movement, sound, bump, and pressure. They have efficient processors, multiple sensors and huge memory, to exhibit intelligence. In addition, they are capable of learning from their mistakes and they can adapt to the new environment.

A Brief History of Artificial Intelligence

Intelligent robots and artificial beings first appeared in ancient Greek myths. Aristotle’s development of the syllogism and its use of deductive reasoning was a key moment in humanity’s quest to understand its own intelligence. While the roots are long and deep, the history of AI as we think of it today spans less than a century. The following is a quick look at some of the most important events in AI.

1940s

- (1942) Isaac Asimov publishes the Three Laws of Robotics, an idea commonly found in science fiction media about how artificial intelligence should not bring harm to humans.

- (1943) Warren McCulloch and Walter Pitts publish the paper “A Logical Calculus of the Ideas Immanent in Nervous Activity,” which proposes the first mathematical model for building a neural network.

- (1949) In his book The Organization of Behavior: A Neuropsychological Theory, Donald Hebb proposes the theory that neural pathways are created from experiences and that connections between neurons become stronger the more frequently they’re used. Hebbian learning continues to be an important model in AI.

1950s

- (1950) Alan Turing publishes the paper “Computing Machinery and Intelligence,” proposing what is now known as the Turing Test, a method for determining if a machine is intelligent.

- (1950) Harvard undergraduates Marvin Minsky and Dean Edmonds build SNARC, the first neural network computer.

- (1950) Claude Shannon publishes the paper “Programming a Computer for Playing Chess.”

- (1952) Arthur Samuel develops a self-learning program to play checkers.

- (1954) The Georgetown-IBM machine translation experiment automatically translates 60 carefully selected Russian sentences into English.

- (1956) The phrase “artificial intelligence” is coined at the Dartmouth Summer Research Project on Artificial Intelligence. Led by John McCarthy, the conference is widely considered to be the birthplace of AI.

- (1956) Allen Newell and Herbert Simon demonstrate Logic Theorist (LT), the first reasoning program.

- (1958) John McCarthy develops the AI programming language Lisp and publishes “Programs with Common Sense,” a paper proposing the hypothetical Advice Taker, a complete AI system with the ability to learn from experience as effectively as humans.

- (1959) Allen Newell, Herbert Simon and J.C. Shaw develop the General Problem Solver (GPS), a program designed to imitate human problem-solving.

- (1959) Herbert Gelernter develops the Geometry Theorem Prover program.

- (1959) Arthur Samuel coins the term “machine learning” while at IBM.

- (1959) John McCarthy and Marvin Minsky found the MIT Artificial Intelligence Project.

1960s

- (1963) John McCarthy starts the AI Lab at Stanford.

- (1966) The Automatic Language Processing Advisory Committee (ALPAC) report by the U.S. government details the lack of progress in machine translation research, a major Cold War initiative with the promise of automatic and instantaneous translation of Russian. The ALPAC report leads to the cancellation of all government-funded MT projects.

- (1969) The first successful expert systems, DENDRAL, a XX program, and MYCIN, designed to diagnose blood infections, are created at Stanford.

1970s

- (1972) The logic programming language PROLOG is created.

- (1973) The Lighthill Report, detailing the disappointments in AI research, is released by the British government and leads to severe cuts in funding for AI projects.

- (1974-1980) Frustration with the progress of AI development leads to major DARPA cutbacks in academic grants. Combined with the earlier ALPAC report and the previous year’s Lighthill Report, AI funding dries up and research stalls. This period is known as the “First AI Winter.”

1980s

- (1980) Digital Equipment Corporation develops R1 (also known as XCON), the first successful commercial expert system. Designed to configure orders for new computer systems, R1 kicks off an investment boom in expert systems that will last for much of the decade, effectively ending the first AI Winter.

- (1982) Japan’s Ministry of International Trade and Industry launches the ambitious Fifth Generation Computer Systems project. The goal of FGCS is to develop supercomputer-like performance and a platform for AI development.

- (1983) In response to Japan’s FGCS, the U.S. government launches the Strategic Computing Initiative to provide DARPA-funded research in advanced computing and AI.

- (1985) Companies are spending more than a billion dollars a year on expert systems and an entire industry known as the Lisp machine market springs up to support them. Companies like Symbolics and Lisp Machines Inc. build specialized computers to run on the AI programming language Lisp.

- (1987-1993) As computing technology improves, cheaper alternatives emerge and the Lisp machine market collapses in 1987, ushering in the “Second AI Winter.” During this period, expert systems prove too expensive to maintain and update, eventually falling out of favour.

1990s

- (1991) U.S. forces deploy DART, an automated logistics planning and scheduling tool, during the Gulf War.

- (1992) Japan terminates the FGCS project, citing failure in meeting the ambitious goals outlined a decade earlier.

- (1993) DARPA ends the Strategic Computing Initiative after spending nearly $1 billion and falling far short of expectations.

- (1997) IBM’s Deep Blue beats world chess champion Garry Kasparov.

2000s

- (2005) STANLEY, a self-driving car, wins the DARPA Grand Challenge.

- (2005) The U.S. military begins investing in autonomous robots like Boston Dynamics’ “BigDog” and iRobot’s “PackBot.”

- (2008) Google makes breakthroughs in speech recognition and introduces the feature in its iPhone app.

2010s

- (2011) IBM’s Watson handily defeats the competition on Jeopardy!.

- (2011) Apple releases Siri, an AI-powered virtual assistant, through its iOS operating system.

- (2012) Andrew Ng, founder of the Google Brain Deep Learning project, uses deep learning algorithms to feed a neural network 10 million YouTube videos as a training set. The neural network learns to recognize a cat without being told what a cat is, ushering in the breakthrough era for neural networks and deep learning funding.

- (2014) Google makes the first self-driving car to pass a state driving test.

- (2014) Amazon’s Alexa, a virtual home smart device, is released.

- (2016) Google DeepMind’s AlphaGo defeats world champion Go player Lee Sedol. The complexity of the ancient Chinese game was seen as a major hurdle to clear in AI.

- (2016) The first “robot citizen,” a humanoid robot named Sophia, is created by Hanson Robotics and is capable of facial recognition, verbal communication and facial expression.

- (2018) Google releases natural language processing engine BERT, reducing barriers in translation and understanding by ML applications.

- (2018) Waymo launches its Waymo One service, allowing users throughout the Phoenix metropolitan area to request a pick-up from one of the company’s self-driving vehicles.

2020s

- (2020) Baidu releases its LinearFold AI algorithm to scientific and medical teams working to develop a vaccine during the early stages of the SARS-CoV-2 pandemic. The algorithm is able to predict the secondary structure of the virus’s RNA sequence in just 27 seconds, 120 times faster than other methods.

- (2020) OpenAI releases natural language processing model GPT-3, which is able to produce text modelled after the way people speak and write.

- (2021) OpenAI builds on GPT-3 to develop DALL-E, which is able to create images from text prompts.

- (2022) The National Institute of Standards and Technology releases the first draft of its AI Risk Management Framework, voluntary U.S. guidance “to better manage risks to individuals, organizations, and society associated with artificial intelligence.”

- (2022) DeepMind unveils Gato, an AI system trained to perform hundreds of tasks, including playing Atari, captioning images and using a robotic arm to stack blocks.

FOR MORE INFORMATIVE BLOGS VISIT @lgseducate.com