If you don't know where to begin, getting the most out of the vast amount of data collected in today's digital age can be daunting. There are many ways to analyze and use that data, and data warehousing is among the most effective. Companies that do not adopt data warehouses are likely to struggle in an increasingly competitive market.

But wait, how are data warehousing and Apache Hive related? Why are we giving so much importance to data warehousing when this blog is about Apache Hive?

Well, to answer this, let’s understand what Apache Hive is.

What is Apache Hive?

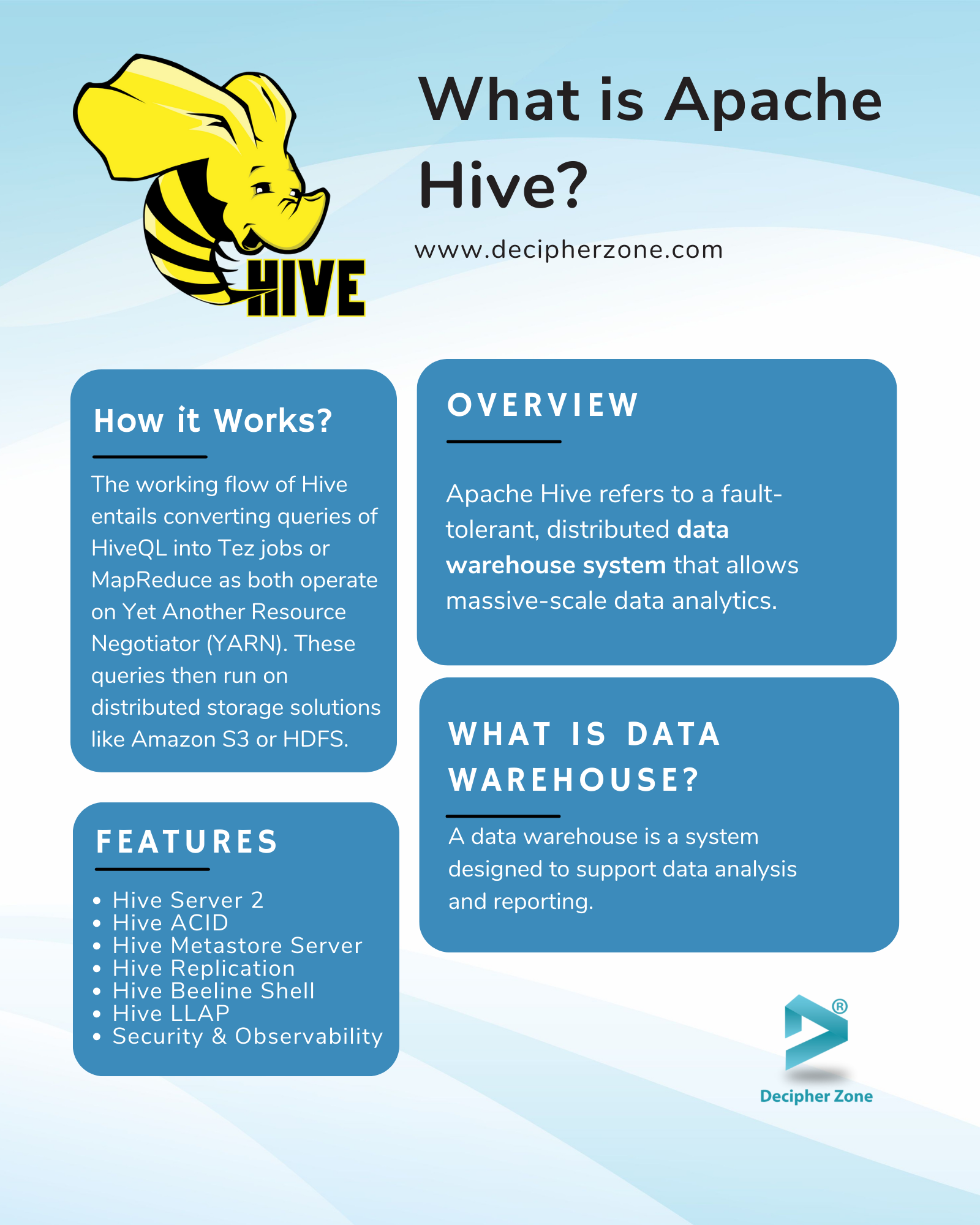

Apache Hive is a fault-tolerant, distributed data warehouse system that enables analytics at massive scale. Its Hive Metastore provides a central repository of metadata that can be analyzed to make informed, data-driven decisions.

Note: A data warehouse is a system designed to support data analysis and reporting. It is one of the core components of business intelligence.

Hive is built on top of Apache Hadoop (an open-source framework for storing and processing large datasets) and supports storage on Amazon S3, Azure Data Lake Storage, and similar services through the Hadoop Distributed File System (HDFS) interface. It enables data scientists to read, write, and manage petabytes of data using SQL.

How Does Apache Hive Work?

Apache Hive was designed to help non-programmers who know SQL handle massive amounts of data, even in the petabyte range. It does so by offering a SQL-like interface called HiveQL. Unlike traditional relational databases, which were developed to answer queries quickly on small datasets, Hive handles large datasets through batch processing.

Hive works by converting HiveQL queries into MapReduce or Tez jobs, both of which run on Yet Another Resource Negotiator (YARN), Apache Hadoop's distributed job scheduling framework. These jobs then process data held in distributed storage such as HDFS or Amazon S3.
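
To give a feel for what such a conversion means, here is a minimal Python sketch of how an aggregate query like `SELECT customer, SUM(amount) FROM orders GROUP BY customer` maps onto the map, shuffle, and reduce phases. It is a conceptual illustration only, not Hive's actual planner: the `orders` rows and all function names are assumptions made up for this example.

```python
from collections import defaultdict

# Hypothetical rows of an `orders` table: (customer, amount).
ROWS = [("alice", 30), ("bob", 15), ("alice", 20), ("carol", 5), ("bob", 10)]


def map_phase(rows):
    """Emit one (key, value) pair per row, keyed by the GROUP BY column."""
    for customer, amount in rows:
        yield customer, amount


def shuffle_phase(pairs):
    """Collect all values that share a key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Apply the aggregate function (SUM here) to each key's values."""
    return {key: sum(values) for key, values in grouped.items()}


def run_group_by_sum(rows):
    """Chain the three phases, mimicking a single batch job."""
    return reduce_phase(shuffle_phase(map_phase(rows)))


if __name__ == "__main__":
    print(run_group_by_sum(ROWS))
```

In a real cluster the map and reduce steps run in parallel across many machines, which is why this batch style suits very large datasets better than small interactive lookups.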

Hive stores both database and table metadata in the Metastore, which enables easy data discovery and abstraction.

Within the Hive ecosystem, there's an additional component known as HCatalog. This layer handles table and storage management, drawing data from the Hive metastore. HCatalog's role is to seamlessly integrate Hive with other tools like MapReduce. This integration is made possible by using the same data structures as Hive, which eliminates the need to redefine metadata for each engine.

For external applications and third-party integrations, there's a tool called WebHCat. It provides a RESTful API for interacting with HCatalog, enabling convenient access to Hive metadata, which can be reused for various purposes.
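
As a rough illustration of that REST interface, the Python sketch below builds the URL for WebHCat's "describe table" resource. It assumes WebHCat's default port (50111) and its `templeton/v1` base path; the host, database, table, and user names are hypothetical, and the function only constructs the URL without sending a request.

```python
from urllib.parse import quote, urlencode


def describe_table_url(host, database, table, user, port=50111):
    """Build the WebHCat URL that describes one HCatalog table.

    Assumes the default WebHCat port and the `templeton/v1` base path.
    """
    path = "/templeton/v1/ddl/database/{}/table/{}".format(
        quote(database, safe=""), quote(table, safe="")
    )
    # WebHCat expects the calling user in the `user.name` query parameter.
    query = urlencode({"user.name": user})
    return f"http://{host}:{port}{path}?{query}"


if __name__ == "__main__":
    # Hypothetical host, table, and user, for illustration only.
    print(describe_table_url("webhcat.example.com", "default", "orders", "analyst"))
```

An HTTP GET against a URL of this shape returns the table's metadata as JSON, which an external tool can then reuse without talking to Hive directly.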

Key Features of Apache Hive

Now that we have a better understanding of what Apache Hive is and how it works, let’s check out its core features as well.

- HiveServer2 (HS2): A service that lets users execute queries. It accepts incoming requests from clients and applications, creates an execution plan, and auto-generates a YARN job to process the query. HS2 is a single process that runs as a composite service, combining the Thrift-based Hive service with a Jetty web server for the web UI. It also streamlines data extraction and processing using the Hive compiler and optimizer.

- Hive ACID: ACID describes four traits of database transactions, namely Atomicity, Consistency, Isolation, and Durability. Apache Hive provides full ACID support for Optimized Row Columnar (ORC) tables. This helps with data ingestion, slowly changing dimensions, bulk updates, and data restatement.

- Hive Metastore Server (HMS): It acts as a central repository of metadata for Hive tables and partitions, kept in a relational database. HMS allows clients and applications to access this information through the metastore service API.

- Hive Replication: Apache Hive supports backup and recovery through bootstrap and incremental replication.

- Hive Beeline Shell: Hive has a command-line interface (CLI), called the Beeline shell, that allows users to run HiveQL statements. Hive also provides Java Database Connectivity (JDBC) and Open Database Connectivity (ODBC) drivers, so JDBC or ODBC applications can execute queries as well.

- Hive LLAP: Through Low Latency Analytical Processing (LLAP), Hive enables interactive, sub-second SQL. LLAP makes Hive faster by using optimized data caching and a persistent query infrastructure.

- Security and Observability: Hive supports Kerberos authentication and integrates with Apache Ranger and Apache Atlas, which strengthens its data security and observability.
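
To make the Beeline and ACID items above more concrete, the following Python sketch assembles a Beeline command line that would create a transactional ORC table. It assumes HiveServer2 listens on its default port (10000) and uses Beeline's `-u`, `-n`, and `-e` options; the host, user, table, and column names are hypothetical. The script only builds and prints the command, so it can be tried without a cluster.

```python
import shlex

# HiveQL for a transactional (ACID) ORC table. The table and column names
# are hypothetical; 'transactional'='true' is the table property that
# turns on ACID behavior.
CREATE_ACID_TABLE = (
    "CREATE TABLE customers (id INT, name STRING) "
    "STORED AS ORC TBLPROPERTIES ('transactional'='true')"
)


def beeline_command(host, database, user, query, port=10000):
    """Return the argument list for running one HiveQL statement via Beeline."""
    jdbc_url = f"jdbc:hive2://{host}:{port}/{database}"
    # -u: JDBC URL of HiveServer2, -n: user name, -e: statement to execute
    return ["beeline", "-u", jdbc_url, "-n", user, "-e", query]


if __name__ == "__main__":
    cmd = beeline_command("hs2.example.com", "default", "analyst", CREATE_ACID_TABLE)
    # shlex.join quotes each argument so the line can be pasted into a shell.
    print(shlex.join(cmd))
```

Keeping the command as a list rather than one string avoids shell-quoting mistakes if it is later passed to `subprocess.run`.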

Benefits of Apache Hive

Some of the benefits of Apache Hive are as follows:

- Hive can easily handle massive data volumes and allow efficient processing of distributed large datasets, making it a scalable solution for big data processing.

- With Hive, you can easily structure and organize data into databases, tables, and partitions for better data warehousing and management.

- It supports different file formats such as ORC, Parquet, etc. for columnar storage and compression, enhancing storage efficiency and query speed.

- Hive has a familiar SQL-like interface for analyzing and querying datasets, making it easier for users to work with big data if they are familiar with SQL.

- With Hive, you can transform and cleanse raw data into usable formats for analysis using ETL (Extract, Transform, Load) operations.

- Using Hive, multiple users or teams can work simultaneously while maintaining data isolation and access control.

- As Hive can operate on both commodity hardware and cloud services, it reduces the cost associated with data processing and storage for an organization.
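
The partitions mentioned in the list above are worth a closer look, because they explain much of Hive's query efficiency. Hive lays out each partition as a `column=value` directory under the table's location, so a query that filters on a partition column only needs to read the matching directories. The Python sketch below imitates that layout and the pruning step. It assumes the default warehouse directory `/user/hive/warehouse`; the `sales` table, its partition columns, and the helper names are hypothetical.

```python
import posixpath

# Default Hive warehouse directory (configurable in real deployments).
WAREHOUSE = "/user/hive/warehouse"


def partition_path(table, **partition):
    """Return the directory that would hold one partition of `table`.

    Each partition column becomes a `column=value` path segment.
    """
    segments = [f"{column}={value}" for column, value in partition.items()]
    return posixpath.join(WAREHOUSE, table, *segments)


def prune(paths, column, value):
    """Keep only partition directories matching a filter like `column = value`."""
    wanted = f"{column}={value}"
    return [path for path in paths if wanted in path.split("/")]


if __name__ == "__main__":
    # Hypothetical `sales` table partitioned by date and country.
    paths = [
        partition_path("sales", dt=dt, country=country)
        for dt in ("2024-01-01", "2024-01-02")
        for country in ("US", "DE")
    ]
    # Equivalent in spirit to: SELECT ... FROM sales WHERE dt = '2024-01-02'
    for path in prune(paths, "dt", "2024-01-02"):
        print(path)
```

Here the filter on `dt` cuts the four partition directories down to two, which is the same idea that lets Hive skip most of a large table when a query targets a narrow date range.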

Conclusion

In essence, by allowing organizations to process and analyze large datasets using familiar SQL-like queries, Apache Hive is a versatile tool within the Hadoop ecosystem for a variety of data-related tasks.

So that was all about Apache Hive. We hope you enjoyed reading this blog and found it informative and helpful. If you have any questions or want to integrate Apache Hive into your business, get in touch with our team of experts for a customized quote.

FAQs: What is Apache Hive

What is Apache Hive used for?

Organizations use Apache Hive for large-scale data processing, data analysis and reporting, data transformation, data warehousing, ETL, log processing, ad hoc queries, data exploration, data archiving, recommendation systems, market analysis, machine learning, and more.

What is the difference between Hadoop and Hive?

Hadoop is a framework for storing and processing big data, while Hive is a SQL-based data warehousing tool built on top of Hadoop that queries data using the Hive Query Language (HiveQL).

What is the difference between Spark and Hive?

Spark is a general-purpose analytics engine that performs complex, in-memory analytics, including near-real-time stream processing, whereas Hive is a data warehouse platform that lets you read, write, and manage large datasets stored in HDFS.