Artificial intelligence is becoming part of everyday life in many forms and regularly finds new fields of application. Dozens of innovations that make our lives easier are based on this technology. Autonomous cars, smart refrigerators, and machine translation are just a few examples. Machine learning also plays a role in building Magento 2 PWAs, making websites faster, safer, and more convenient to use. Another integral part of artificial intelligence development is conversational AI.

This article explains how conversational AI relies on a confidence score and what can be done to improve it.

What Conversational AI Is & Where It Is Used

Conversational AI combines a set of technologies, large volumes of data, machine learning, and natural language processing to imitate human conversation. It is increasingly used in AI chatbots and voice assistants that users can talk to.

The main advantage of conversational AI over traditional chatbot solutions is that it makes machines capable of understanding, processing, and responding to language in a human-like way.

Beyond online chatbots and voice assistants for customer support, conversational AI is finding its way into other areas that help businesses become more profitable. For example, conversational AI can:

- make a company accessible to more users through text-to-speech, dictation, and language translation;

- optimize HR processes such as employee training, as well as claims processing in health care services;

- power search autocomplete and spell-checking, like the suggestions you have probably seen on Google;

- drive applications such as Amazon Alexa, Apple Siri, and Google Home, which rely on automatic speech recognition to interact with end users.

Confidence Scores in Conversational AI and Chatbots

To convert speech to text and text to meaning, voice-based intelligent virtual assistants use models and templates that make these decisions automatically. However, such systems are not immune to errors.

If you make a request in a crowded, noisy place, the accuracy of speech-to-text conversion will suffer. If your wording is vague, the system's confidence in the meaning of the text will be low. Either way, the final result is affected, and so is the AI's reaction in the conversation.

The process usually works as follows:

- The conversational bot receives the request.

- It compares the input with all the statements, or training phrases, stored in the system.

- It replies with the answer linked to the predefined question that your request matches most closely.
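The matching step above can be sketched in a few lines of Python. This is a minimal illustration, assuming a bag-of-words representation and cosine similarity; production NLU engines usually rely on trained models, and the intents and training phrases here are made up.

```python
import math
from collections import Counter

# Hypothetical intents and training phrases, for illustration only.
TRAINING_PHRASES = {
    "buy_book": ["i want to buy a book", "where can i purchase a book"],
    "car_colors": ["what colors of cars do you have", "which car colors are available"],
    "opening_hours": ["when are you open", "what are your opening hours"],
}

def vectorize(text: str) -> Counter:
    """Turn a sentence into a bag-of-words count vector."""
    return Counter(text.lower().replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def best_match(request: str) -> tuple[str, float]:
    """Compare the request with every training phrase and return the closest intent."""
    query = vectorize(request)
    best_intent, best_score = "", 0.0
    for intent, phrases in TRAINING_PHRASES.items():
        for phrase in phrases:
            score = cosine(query, vectorize(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent, best_score

print(best_match("I would like to buy a book"))
```

The call returns the `buy_book` intent with a similarity below 1, because the request only partly overlaps with the stored phrase "i want to buy a book".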

But How Does the System Determine the Confidence Score?

The natural language understanding (NLU) system calculates a value from zero to one for each intent. This value is known as a confidence score. A confidence threshold then lets you decide whether to trust the label the system assigned to the user's input.
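One common way to turn a classifier's raw outputs into per-intent values between zero and one is the softmax function. The sketch below assumes a model that produces one raw score (logit) per intent; the intent names and numbers are invented, and not every NLU engine computes confidence this way.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Map raw per-intent scores to confidences in [0, 1] that sum to 1."""
    # Subtracting the largest logit avoids overflow and does not change the result.
    top = max(logits.values())
    exps = {intent: math.exp(score - top) for intent, score in logits.items()}
    total = sum(exps.values())
    return {intent: value / total for intent, value in exps.items()}

# Hypothetical raw scores from an intent classifier.
raw = {"buy_book": 2.0, "car_colors": 0.5, "opening_hours": -1.0}
for intent, confidence in softmax(raw).items():
    print(f"{intent}: {confidence:.2f}")
```

Because softmax confidences sum to 1, one strong intent pushes the others down. Some engines instead score each intent independently, in which case the values need not sum to 1.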

A confidence threshold set to 0 means the system will accept every input it receives from the user. In this case, there may be plenty of mistakes. For example, if the assistant was not trained to answer the question "What colors of cars do you have?", it will instead answer another request that it believes is close. In some cases, the assistant may even treat a question like "What is the fastest car?" as a close match, even though it is obviously off-topic.

As the threshold increases, the number of accepted inputs gradually falls. At the maximum threshold of 1, the system accepts only the most precise inputs, those that coincide with the pre-set hypotheses.

As a rule, users get the response for the best-fit intent, provided its score exceeds the threshold. If no intent does, the system returns a default message.
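The threshold logic described above can be sketched as follows. The function name, the fallback text, the sample answers, and the scores are all hypothetical; the point is only that the best-scoring intent is used if its score reaches the threshold, and a default message is returned otherwise.

```python
# Hypothetical default message used when no intent is confident enough.
FALLBACK = "Sorry, I didn't get that. Could you rephrase, or would you like to talk to an agent?"

def respond(confidences: dict[str, float], answers: dict[str, str], threshold: float) -> str:
    """Return the answer for the best-fit intent, or the default message below the threshold."""
    best_intent = max(confidences, key=confidences.get)
    if confidences[best_intent] >= threshold:
        return answers[best_intent]
    return FALLBACK

# Hypothetical answers; the user actually asked about car colors, which the bot was not trained on.
answers = {"buy_book": "You can order books from our online store.",
           "fastest_car": "Our fastest model is the Sprint."}
scores = {"buy_book": 0.15, "fastest_car": 0.35}

print(respond(scores, answers, threshold=0.0))  # off-topic answer: everything is accepted
print(respond(scores, answers, threshold=0.5))  # default message
```

With the threshold at 0, the bot confidently answers the wrong question; at 0.5, it falls back to the default message instead.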

In this screenshot, we see the behavior of an intelligent chatbot that received a request it did not recognize. Despite being stuck, it offers the user help or a way to continue with the request. This keeps the dialogue going, so the business does not lose a potential client.

Image credit: Medium

Human-in-the-Loop

Most AI applications use statistical models to make predictions. In this approach, the system calculates the confidence score on its own, based on the model built into it.

If the system is not confident enough about a request, you can use an alternative approach known as “human-in-the-loop”. In other words, the system defers to a human and then adds that human's judgment to its model to become smarter.

This simple pattern addresses one of the biggest problems in machine learning: what to do with predictions the model is unsure about. In some cases, it can raise accuracy to as much as 99 percent.

For example, the “Big 4” accounting firms (PwC, EY, Deloitte, and KPMG) implement human-in-the-loop machine learning architectures to ensure consistency.
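A minimal sketch of the human-in-the-loop pattern, under stated assumptions: the `classify` and `ask_human` callables are hypothetical stand-ins for a real model and a real review queue, and "adding the judgment to the model" is reduced here to storing the labeled example for the next training run.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class HumanInTheLoop:
    classify: Callable[[str], tuple[str, float]]  # hypothetical model: text -> (intent, confidence)
    ask_human: Callable[[str], str]                # hypothetical reviewer: text -> correct intent
    threshold: float = 0.7
    new_training_data: list[tuple[str, str]] = field(default_factory=list)

    def handle(self, text: str) -> str:
        intent, confidence = self.classify(text)
        if confidence >= self.threshold:
            return intent  # confident enough: the model decides on its own
        # Not confident: defer to a human and keep their label for retraining.
        label = self.ask_human(text)
        self.new_training_data.append((text, label))
        return label

loop = HumanInTheLoop(
    classify=lambda text: ("buy_book", 0.9) if "book" in text else ("unknown", 0.2),
    ask_human=lambda text: "car_colors",
)
print(loop.handle("I want to buy a book"))    # buy_book
print(loop.handle("Do you have a red one?"))  # car_colors, labeled by the human
print(loop.new_training_data)                 # [('Do you have a red one?', 'car_colors')]
```

Only the low-confidence request reaches the human, and only that request is added to the data used to retrain the model.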

Threshold Scenarios

Setting a threshold means finding the optimal balance between Accuracy and Coverage.

- a) If you prioritize Accuracy (or precision), you need to raise the threshold. This requires a closer match to the user's request but results in the fewest accepted requests. For example, the system may no longer recognize the query “I would like to buy a book” as a variant of “I want to buy a book”.

- b) If Coverage (or recall) comes first and a partial match to the user's question is acceptable, you need to lower the threshold.

The best option is a value at which the system rejects as many incorrect hypotheses and accepts as many correct ones as possible.
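To find that balance in practice, you can replay a labeled set of test utterances at several thresholds and measure both quantities. In this sketch, coverage is taken to be the share of utterances the bot accepts rather than falling back, and accuracy the share of accepted utterances whose intent was correct; these definitions and the sample data are assumptions for illustration.

```python
# Hypothetical test results: (predicted intent, confidence, true intent).
results = [
    ("buy_book", 0.95, "buy_book"),
    ("buy_book", 0.55, "buy_book"),          # "I would like to buy a book"
    ("fastest_car", 0.40, "car_colors"),     # off-topic match
    ("opening_hours", 0.80, "opening_hours"),
    ("car_colors", 0.30, "car_colors"),
    ("buy_book", 0.20, "opening_hours"),
]

def accuracy_and_coverage(records, threshold):
    """Accuracy among accepted inputs, and the share of inputs accepted at all."""
    accepted = [(pred, true) for pred, conf, true in records if conf >= threshold]
    coverage = len(accepted) / len(records)
    correct = sum(pred == true for pred, true in accepted)
    # Accuracy is undefined when nothing is accepted.
    accuracy = correct / len(accepted) if accepted else float("nan")
    return accuracy, coverage

for threshold in (0.0, 0.25, 0.5, 0.75):
    acc, cov = accuracy_and_coverage(results, threshold)
    print(f"threshold={threshold:.2f}  accuracy={acc:.2f}  coverage={cov:.2f}")
```

With this made-up data, accuracy climbs from 0.67 at threshold 0 to 1.00 at 0.5, while coverage drops from 1.00 to 0.50; at 0.75, even the correctly recognized “I would like to buy a book” (confidence 0.55) is rejected.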

Parameters for Determining a Confidence Score

To determine the optimal confidence threshold for your particular case, the author of an article on the Genesys blog suggests taking four outcomes into account:

- In-domain hypothesis that was correct and accepted by the system without asking for confirmation (ID-CA)

- In-domain hypothesis that was correct and confirmed by the user when prompted (ID-CC)

- In-domain hypothesis that was false and wrongly accepted by the system without prompting (ID-FA)

- In-domain hypothesis that was false and rejected by the user when prompted (ID-FR)

The ideal behavior is option 1 (ID-CA). Making the user answer confirmation prompts, as in options 2 and 4, is undesirable but acceptable, unlike option 3 (ID-FA), which is a plain error.
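The four outcomes can be expressed as a small function. This sketch assumes, as the categories imply, that hypotheses scoring at or above a confirmation threshold are accepted silently while the rest trigger a confirmation prompt, and that a user confirms correct hypotheses and rejects false ones; the function and parameter names are illustrative, not taken from Genesys.

```python
def outcome(is_correct: bool, confidence: float, confirm_threshold: float) -> str:
    """Label an in-domain hypothesis with one of the four outcome categories."""
    if confidence >= confirm_threshold:
        # Accepted without prompting the user.
        return "ID-CA" if is_correct else "ID-FA"
    # Below the threshold the user is prompted; assume they confirm correct
    # hypotheses and reject false ones.
    return "ID-CC" if is_correct else "ID-FR"

print(outcome(True, 0.9, 0.25))   # ID-CA: correct and accepted silently
print(outcome(False, 0.6, 0.25))  # ID-FA: the worst case
print(outcome(False, 0.1, 0.25))  # ID-FR: caught by the confirmation prompt
```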

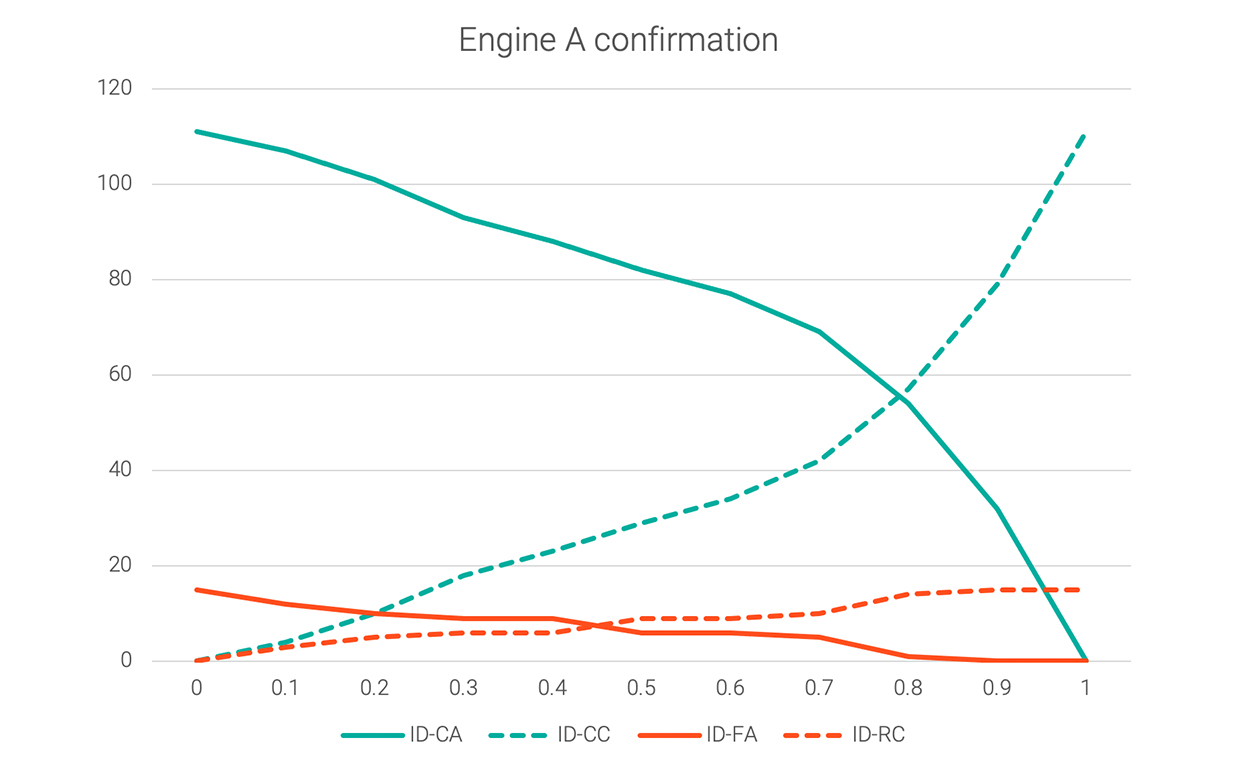

The graph shows how the system behaves in one particular case as the confidence threshold increases from 0 to 1.0 in steps of 0.1.

Image credit: Genesys

According to this research, if the confirmation threshold is set to 0, both correct accepts (the best outcome) and false accepts (the worst) are at their highest, because every hypothesis is accepted without confirmation. Raising the confirmation threshold to 0.8 reduces the number of errors to a minimum, but in more than half of the cases the system then requires user confirmation.

A good value could be around 0.25. At this point, the number of wrongly accepted hypotheses has dropped significantly, while the number of correctly accepted ones has not yet fallen much. In the end, the decision depends on the specific application: how costly an incorrectly accepted request is, and how acceptable it is to ask the user an extra question.
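That trade-off can be made explicit by assigning a cost to each outcome and picking the threshold with the lowest total. The weights below (a false accept assumed to cost ten times as much as one confirmation prompt) and the sample hypotheses are assumptions; the sweep uses the same 0.1 steps as the graph, but not its data.

```python
# Hypothetical evaluation set: (is the hypothesis correct?, confidence).
hypotheses = [(True, 0.95), (True, 0.9), (True, 0.8), (True, 0.7), (True, 0.6),
              (True, 0.5), (True, 0.35), (True, 0.2),
              (False, 0.25), (False, 0.15), (False, 0.1), (False, 0.05)]

# Assumed costs: a false accept is ten times worse than one confirmation prompt.
COST = {"ID-CA": 0.0, "ID-CC": 1.0, "ID-FR": 1.0, "ID-FA": 10.0}

def counts(threshold: float) -> dict[str, int]:
    """Count the four outcomes for a given confirmation threshold."""
    c = {"ID-CA": 0, "ID-CC": 0, "ID-FA": 0, "ID-FR": 0}
    for correct, confidence in hypotheses:
        if confidence >= threshold:  # accepted without prompting
            c["ID-CA" if correct else "ID-FA"] += 1
        else:                        # user is asked to confirm
            c["ID-CC" if correct else "ID-FR"] += 1
    return c

def total_cost(threshold: float) -> float:
    """Weighted sum of outcome counts at this threshold."""
    return sum(COST[name] * n for name, n in counts(threshold).items())

thresholds = [i / 10 for i in range(11)]  # 0.0, 0.1, ..., 1.0
for t in thresholds:
    c = counts(t)
    print(f"{t:.1f}  CA={c['ID-CA']} CC={c['ID-CC']} FA={c['ID-FA']} FR={c['ID-FR']}  cost={total_cost(t):.0f}")

best = min(thresholds, key=total_cost)
print("lowest-cost threshold:", best)
```

With these numbers, the lowest cost falls at 0.3. Raising the cost of a false accept tends to push the chosen threshold up, while making confirmation prompts more expensive tends to push it down.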

How Can Businesses Improve Their Confidence Score?

AI chatbots rely on conversational AI, which, as described above, combines a set of technologies, large volumes of data, machine learning, and natural language processing to automate conversations.

This means that intelligent chatbots learn over time. When a chatbot receives a request it is not sure about, it sends the input to a live agent. It then analyzes the live agent’s answer, draws conclusions, and becomes smarter.

Over time, the chatbot gets better at recognizing and processing user queries phrased in different ways. This raises customer satisfaction and also helps the bot with its other mission: reducing the workload of the company's live support team. This largely explains why businesses shouldn’t ignore the confidence score in conversational AI and should try to improve it.

The key to improving confidence scores is to use scoring with reinforcement learning. In practice, this means testing chatbots with end users to maximize request recognition. This way, the bot encounters as many phrasings as possible and adds them to its training phrases.
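One practical way to feed real user phrasings back into training is to have humans label the logged utterances the bot was least sure about. This sketch uses the margin between the two highest intent confidences as the uncertainty measure, a common active-learning heuristic swapped in here for illustration; the log format and the sample data are hypothetical.

```python
# Hypothetical log: utterance -> per-intent confidences from the bot.
conversation_log = {
    "I want to buy a book": {"buy_book": 0.92, "car_colors": 0.05, "opening_hours": 0.03},
    "got any red ones?": {"car_colors": 0.41, "buy_book": 0.38, "opening_hours": 0.21},
    "are you open on sunday": {"opening_hours": 0.55, "buy_book": 0.30, "car_colors": 0.15},
    "need something to read": {"buy_book": 0.48, "opening_hours": 0.46, "car_colors": 0.06},
}

def margin(confidences: dict[str, float]) -> float:
    """Gap between the two highest intent confidences; a small gap means the bot was torn."""
    top_two = sorted(confidences.values(), reverse=True)[:2]
    return top_two[0] - top_two[1]

def pick_for_annotation(log: dict[str, dict[str, float]], budget: int) -> list[str]:
    """Return the `budget` utterances with the smallest margins, most uncertain first."""
    return sorted(log, key=lambda utterance: margin(log[utterance]))[:budget]

print(pick_for_annotation(conversation_log, budget=2))
```

The two ambiguous requests are selected for human labeling, while the clear-cut book request is left alone.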

Final Word

Artificial intelligence is already changing many aspects of how businesses operate. Customers increasingly expect easy, natural communication with computers, and enterprises, in turn, are focusing more on customer satisfaction. As a result, conversational AI bots are likely to replace regular chatbots: cognitive bots that can decode complex scenarios and recognize human sentiment.

Still, collaboration between humans and computers is critical to every application built on machine learning algorithms. At the end of the day, it is this combination, not the superiority of one over the other, that produces the best results. Within it, confidence scores play a clear role: they indicate which utterances should be annotated by humans, allowing the bot to keep the conversation going instead of replying with "Could not find anything."